Artificial intelligence assistance during upper endoscopy: a game changer in detection of esophageal squamous cell carcinoma?

Detection of superficial esophageal squamous cell carcinoma (ESCC) and precancerous lesions is of great importance, because these lesions can be treated with less invasive endoscopic resection rather than esophagectomy, which is associated with high rates of morbidity (50–75%) and mortality (up to 5%) (1-3). Superficial ESCC often presents as a subtle flat lesion that can easily be missed during routine diagnostic white light endoscopy (WLE) (4). In the last few decades, endoscopic detection techniques have improved, for example through the introduction of Lugol dye chromoendoscopy (LCE) and narrow-band imaging (NBI) (5,6). In LCE, iodine binds to glycogen, which is abundant in normal squamous epithelium and diminished or absent in dysplastic/neoplastic tissue. As a result, areas of the esophagus that contain dysplastic/neoplastic tissue stain less intensely. NBI is based on narrowing the bandwidth of spectral transmittance of optical filters, which emphasizes capillary vessels in endoscopic images in real time. Unfortunately, a considerable proportion of malignant lesions is still missed, for instance due to biopsy sampling error and interobserver variability in the detection of lesions. Artificial intelligence (AI) technology might have the potential to overcome these hurdles and increase work productivity and efficiency.

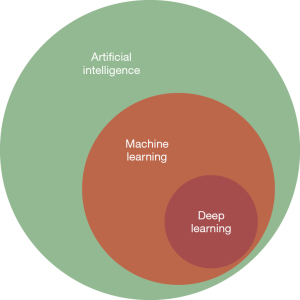

AI-assisted endoscopy is based on computer algorithms that perform tasks normally requiring human intelligence. The underlying principle is machine learning (ML), a general term for teaching computer algorithms to recognize patterns in data (7,8). The AI system learns and improves from experience with human-made training data without being explicitly programmed, with the aim of achieving performance comparable or superior to that of humans. Deep learning (DL) is one approach to ML that is inspired by the biological neural networks of the human brain and uses a layered structure of algorithms (7) (Figure 1). A convolutional neural network (CNN), a DL architecture commonly used for image analysis, learns from its own mistakes by iteratively correcting its errors.

The importance of AI in gastroenterology has gained increasing recognition in the last few years. Since 2019, annual multidisciplinary meetings have been held with experts (academic, industrial, and regulatory) from diverse fields, including gastroenterology. During these meetings, studies on AI in gastroenterology are presented, gaps in knowledge are identified, and recommendations are made for research that would have the highest impact on the implementation of AI (9).

The article by Yuan et al. (10) in The Lancet Gastroenterology & Hepatology, which used a DL AI system, raises the questions of whether an AI system is currently equal or even superior to expert endoscopists in detecting ESCC, and what developments the future will hold. Before AI systems are implemented in all upper endoscopies on the basis of the current literature and the study by Yuan et al., three fundamental tasks leading to the diagnosis of lesions, in which AI could assist endoscopists, have to be considered: quality assessment, detection of lesions, and characterization of lesions. Is an AI system of additional value in all three tasks, or are there differences between them?

Quality assessment

Several human and patient-related factors can impair the quality of an upper endoscopy. For example, quick inspection, limited experience of the endoscopist, poor tolerance of the patient, and anatomically challenging areas can contribute to incomplete assessment of the upper gastrointestinal (GI) tract.

Several studies have investigated improvement of quality assessment by photodocumentation. A publication of the Quality Improvement Initiative of the European Society for Gastrointestinal Endoscopy (ESGE) recommends documentation of all normal anatomical landmarks (proximal and distal esophagus, Z-line, diaphragm indentation, cardia and fundus in inversion, corpus in forward view including lesser curvature, corpus in retroflex view including greater curvature, angulus in partial inversion, antrum, duodenal bulb, and second part of duodenum) and abnormal findings (11). AI systems based on these anatomical landmarks have been developed to assist with photodocumentation and assessment of completeness of endoscopy. In 2019, Wu et al. introduced a real-time quality-improving system to monitor blind spots, time the procedure, and automatically generate photodocumentation during upper endoscopy (12). Patients were randomized to upper endoscopy with or without the AI system. An accuracy of 90% was reported for endoscopies with the AI system, and the blind spot rate was significantly lower than in the control group. Subsequently, Choi et al. (2022) reported that an AI-driven quality control system classified gastroscopy images into different landmarks with 98% accuracy and evaluated completeness of gastroscopy with 89% accuracy (13). Unfortunately, a comparative analysis of the performance of AI systems is often not possible. The systems differ in, for example, the classification of landmarks, the number of images used, the selection of informative frames, and the use of single- vs. multiple-frame algorithms, which implies that the problems addressed by the different AI models can have different degrees of complexity (14). The study by Yuan et al. in The Lancet Gastroenterology & Hepatology does not elaborate on photodocumentation for quality assessment of the procedures that were performed (10). The authors did, however, mention the exclusion of patients in whom observation of the entire esophagus was incomplete.

To further improve the quality of upper endoscopy, the ESGE guideline recommends a minimum duration of 7 minutes from intubation to extubation, and Asian guidelines recommend a minimum duration of 8 minutes (11). In the study by Yuan et al., inspection time was much shorter than the recommended 7 or 8 minutes and did not differ significantly between groups (77 seconds for the AI group and 76 seconds for the non-AI group) (10). Nevertheless, detection rates were not lower than in previous literature.

Detection of lesions

Most studies on AI for upper gastroscopy originate from Asia and mainly focus on detection of squamous dysplasia/ESCC with different study designs. Horie et al. (15) showed that a DL AI system for detection of esophageal cancer (both adenocarcinoma and squamous cell carcinoma) can accurately detect lesions with a sensitivity of 98% based on more than 9,500 images for development and validation of the system. In addition, the AI system was able to detect lesions <10 mm that are easily overlooked by expert endoscopists.

As mentioned before, endoscopic screening for ESCC is challenging due to its susceptibility to interobserver variability, especially between expert and trainee endoscopists (sensitivity 100% vs. 53%) (16). Cai and colleagues developed a CNN-AI system based on WLE images to detect (pre)cancerous lesions and compared it with endoscopists at three levels of experience (>15, 5–15, and <5 years). Sensitivity of the CNN system was higher than that of all three groups (98% vs. 86%, 79%, and 62%, respectively) (17). Fukuda et al. divided the diagnostic process for ESCC using a CNN-DL system into two steps: identification of suspicious lesions and differentiation of cancer from non-cancer. For both steps, the sensitivity appeared significantly higher for the AI system than for expert endoscopists (18). The mentioned studies have some limitations. In the latter two studies, a small number of images was used for training and validating the AI systems. In all three studies, poor-quality images were excluded, so real-life clinical practice could not be simulated. Furthermore, the study by Fukuda et al. included only expert endoscopists, so no conclusion could be drawn about implementation for less experienced endoscopists. It is likely, however, that the additional value of AI would be even higher in gastroscopies performed by less experienced endoscopists.

In The Lancet Gastroenterology & Hepatology, Yuan et al. used a different strategy to investigate the use of AI in diagnosing ESCC. They looked at the miss rate of superficial ESCC and precancerous lesions, calculated on a per-lesion and per-patient basis. In total, 12 hospitals in China participated in this randomized controlled trial (10). Patients were randomly assigned to either the AI-first group or the routine-first group, and the same endoscopist performed tandem upper gastroscopy for each patient on the same day. The two groups differed only in the order in which the gastroscopies with and without the AI system were performed. The per-lesion and per-patient miss rates of superficial ESCC and precancerous lesions were not significantly reduced by the implementation of the AI system. However, the detection rate in the AI-first group was significantly higher than in the routine-first group. The overall detection rate was, surprisingly, highest in secondary class A hospitals (compared to tertiary class A or B hospitals). This might be explained by the fact that only two of the 12 participating hospitals were secondary class A hospitals and that they did not include junior endoscopists (vs. 15 junior endoscopists in the other hospitals). Although a fair comparison with other studies could not be made because prospective, randomized literature is lacking, the AI system was significantly more effective in detecting lesions <10 mm, in line with the findings of Horie et al. (15).
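To make the two endpoints concrete, the sketch below computes a per-lesion and a per-patient miss rate for one arm of a tandem design. It assumes the definition commonly used in tandem endoscopy studies (lesions found only at the second examination divided by all lesions found over both examinations); the exact definitions used by Yuan et al. may differ. The class name, function names, and example counts are hypothetical and are not taken from the trial.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class TandemExam:
    """Lesion counts for one patient in a tandem (back-to-back) design."""
    first_pass: int   # lesions detected at the first endoscopy
    second_only: int  # lesions detected only at the second endoscopy (missed at first)


def per_lesion_miss_rate(exams: List[TandemExam]) -> float:
    """Lesions missed at the first pass / all lesions found over both passes."""
    missed = sum(e.second_only for e in exams)
    total = sum(e.first_pass + e.second_only for e in exams)
    return missed / total if total else float("nan")


def per_patient_miss_rate(exams: List[TandemExam]) -> float:
    """Patients with >=1 missed lesion / patients with >=1 lesion (assumed definition)."""
    with_lesion = [e for e in exams if e.first_pass + e.second_only > 0]
    with_missed = [e for e in with_lesion if e.second_only > 0]
    return len(with_missed) / len(with_lesion) if with_lesion else float("nan")


# Hypothetical data for four patients; not taken from any study.
exams = [
    TandemExam(first_pass=1, second_only=0),  # lesion found at first pass
    TandemExam(first_pass=0, second_only=1),  # lesion missed at first pass
    TandemExam(first_pass=2, second_only=1),  # one of three lesions missed
    TandemExam(first_pass=0, second_only=0),  # no lesions
]

print(f"per-lesion miss rate:  {per_lesion_miss_rate(exams):.2f}")   # 0.40
print(f"per-patient miss rate: {per_patient_miss_rate(exams):.2f}")  # 0.67
```

In this toy example the two rates differ (2 of 5 lesions vs. 2 of 3 patients), which illustrates why both are reported separately.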

One could question the design of the study by Yuan et al., a point the authors also raise in their own discussion. It is a major limitation that both endoscopies in the same patient were performed by the same endoscopist, leading to intraobserver bias. As a solution, they propose that the two consecutive gastroscopies be performed by different endoscopists, which would replace intraobserver with interobserver variability and thereby exchange one limitation for another. Our proposal would be to set up a study in which a first upper endoscopy is performed with the assistance of AI, and a second upper endoscopy is performed by the same endoscopist without the assistance of AI, for example 2–4 weeks later. In that way, intra- and interobserver variability may be minimized.

Interestingly, in the subgroup analysis reported in the supplementary material (pp. 19–20), the detection rate was higher when using Olympus 260 endoscopes compared to the newer Olympus 290 endoscopes. This result suggests that AI may be of particular additional value for lower definition endoscopes. However, this should be validated in a different cohort, since the overall number of lesions found is small in both groups. In a study by Park et al., the same endoscopes were compared for detection of the gastroesophageal junction with AI, showing similar performance for 260 and 290 endoscopes (19). Further research is needed to determine the value of AI for endoscopes of different definition.

Another noteworthy subject is the type of lesions that were additionally found by the AI system in the routine-first group. According to Table 2, four of the six lesions that were found by AI and missed by endoscopists were low-grade intraepithelial neoplasia. Guidelines suggest no immediate treatment for low-grade dysplasia, so the question arises whether AI truly contributes to the detection of lesions that are of greater importance and in need of treatment. Nevertheless, low-grade dysplasia cannot be ignored: in a recent nationwide cohort study from the Netherlands, the risk of developing ESCC from squamous dysplasia was substantial (up to 8.5%), and hence endoscopic surveillance is indicated (20).

Characterization of lesions

Once a lesion is detected, the next step is to characterize it. Distinguishing between early and advanced cancer is of great significance, as this determines the treatment modality. Early cancer that invades only the mucosa and superficial submucosa is considered suitable for endoscopic treatment, whereas more advanced cancer requires chemoradiation and/or esophagectomy.

A system that is often used to describe a lesion is the international Paris classification, which classifies superficial lesions into subtypes depending on protrusion and excavation. Based on this classification, the risk of lymph node metastasis can be estimated. For example, a slightly elevated (0–IIa) or flat (0–IIb) lesion has a smaller risk of lymph node metastasis than a depressed lesion (0–IIc). Unfortunately, no studies on the use of AI for classifying a lesion into the different Paris subtypes are available. However, a study by Meng et al. on the development of an AI system for detection of ESCC and its application value showed that flat lesions (Paris type 0–IIb) were more likely to be inaccurately detected by the AI system (21).

AI-assisted staging of ESCC by determining invasion depth with WLE, NBI, or (virtual) chromoendoscopy, through the assessment of microvascular features such as intraepithelial papillary capillary loop (IPCL) patterns, has been investigated extensively. The independent performance of the DL systems that were used in two Japanese studies from 2019 and 2020 appeared to be high, with sensitivities ranging between 80% and 90% (22,23). Furthermore, Shimamoto et al. developed an AI model from endoscopic videos to estimate invasion depth and compared it with experienced endoscopists (7 to 25 years of experience). In their study, the AI system outperformed the experienced endoscopists in sensitivity (71% vs. 42%) and accuracy (89% vs. 84%), with comparable specificity (95% vs. 97%) (24). As with the detection studies discussed in the previous section, these studies validated AI systems on high-quality images/videos only and thus do not represent real-life clinical practice.
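As a reminder of how the three reported metrics relate to each other, the following minimal sketch derives sensitivity, specificity, and accuracy from a 2×2 table. The function name and all counts are hypothetical and chosen for illustration only; they are not the data of any cited study. The example shows how one reader can have higher sensitivity and accuracy than another while having slightly lower specificity.

```python
def diagnostic_metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Sensitivity, specificity, and accuracy from 2x2 confusion-matrix counts."""
    return {
        "sensitivity": tp / (tp + fn),                # true positives / all diseased
        "specificity": tn / (tn + fp),                # true negatives / all non-diseased
        "accuracy": (tp + tn) / (tp + fp + tn + fn),  # all correct calls / all cases
    }


# Hypothetical counts: 21 deeply invasive and 79 superficial lesions per reader.
system_a = diagnostic_metrics(tp=15, fp=4, tn=75, fn=6)
reader_b = diagnostic_metrics(tp=9, fp=2, tn=77, fn=12)

for name, m in (("system A", system_a), ("reader B", reader_b)):
    print(name, {k: round(v, 3) for k, v in m.items()})
```

Because accuracy depends on the case mix, a large gain in sensitivity can outweigh a small loss in specificity, so the three metrics are best read together.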

Conclusions

The implementation of AI systems in upper GI endoscopy is at a rather early stage compared to that for the lower GI tract. However, several studies have demonstrated its feasibility and additional value with promising results. The endoscopic tasks in which AI can assist are quality assessment, detection of lesions, and characterization of lesions. To establish quality assurance and strengthen confidence in AI systems, unified benchmarking for these three main tasks is needed and has yet to be established. Most reports have focused on retrospectively collected images, and data from large, prospective, randomized trials are lacking. The results of the prospective, randomized study by Yuan et al. are very interesting and add to our knowledge about the additional value of AI for detection of ESCC. The study supports the use of AI but does not show a significantly lower per-lesion or per-patient miss rate for AI-first vs. routine-first endoscopy. The detection rate, however, appeared to be significantly higher in the AI-first group.

Other recent literature suggests that AI could be of additional value especially for less experienced endoscopists, by decreasing their miss rate to a level potentially comparable to that of experienced endoscopists. Additionally, real-time AI-assisted endoscopy could improve the yield of biopsies by indicating optimal biopsy sites. More accurate prediction of tumor invasion depth with AI assistance may also reduce unnecessary referrals to the surgical department for patients who are actually suitable for endoscopic resection.

In the future, AI-assisted endoscopy should be compared with unassisted endoscopy in larger, prospective, randomized real-time studies. These studies should investigate the additional value of AI by comparing detection rates between higher and lower resolution endoscopes and between the different types of lesions (low-grade dysplasia, high-grade dysplasia, cancer).

Acknowledgments

Funding: None.

Footnote

Provenance and Peer Review: This article was commissioned by the editorial office, Chinese Clinical Oncology. The article has undergone external peer review.

Peer Review File: Available at https://cco.amegroups.com/article/view/10.21037/cco-24-46/prf

Conflicts of Interest: Both authors have completed the ICMJE uniform disclosure form (available at https://cco.amegroups.com/article/view/10.21037/cco-24-46/coif). The authors have no conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

- Low DE, Kuppusamy MK, Alderson D, et al. Benchmarking Complications Associated with Esophagectomy. Ann Surg 2019;269:291-8. [Crossref] [PubMed]

- Shen KR, Harrison-Phipps KM, Cassivi SD, et al. Esophagectomy after anti-reflux surgery. J Thorac Cardiovasc Surg 2010;139:969-75. [Crossref] [PubMed]

- Fitzgerald RC, di Pietro M, Ragunath K, et al. British Society of Gastroenterology guidelines on the diagnosis and management of Barrett's oesophagus. Gut 2014;63:7-42. [Crossref] [PubMed]

- Rodríguez de Santiago E, Hernanz N, Marcos-Prieto HM, et al. Rate of missed oesophageal cancer at routine endoscopy and survival outcomes: A multicentric cohort study. United European Gastroenterol J 2019;7:189-98. [Crossref] [PubMed]

- Voegeli R. Schiller's iodine test in the diagnosis of esophageal diseases. Preliminary report. Pract Otorhinolaryngol (Basel) 1966;28:230-9. [PubMed]

- Yoshida T, Inoue H, Usui S, et al. Narrow-band imaging system with magnifying endoscopy for superficial esophageal lesions. Gastrointest Endosc 2004;59:288-95. [Crossref] [PubMed]

- El Hajjar A, Rey JF. Artificial intelligence in gastrointestinal endoscopy: general overview. Chin Med J (Engl) 2020;133:326-34. [Crossref] [PubMed]

- Tokat M, van Tilburg L, Koch AD, et al. Artificial Intelligence in Upper Gastrointestinal Endoscopy. Dig Dis 2022;40:395-408. [Crossref] [PubMed]

- Parasa S, Wallace M, Bagci U, et al. Gastrointest Endosc 2020;92:938-945.e1. [Crossref] [PubMed]

- Yuan XL, Liu W, Lin YX, et al. Effect of an artificial intelligence-assisted system on endoscopic diagnosis of superficial oesophageal squamous cell carcinoma and precancerous lesions: a multicentre, tandem, double-blind, randomised controlled trial. Lancet Gastroenterol Hepatol 2024;9:34-44. [Crossref] [PubMed]

- Bisschops R, Areia M, Coron E, et al. Performance measures for upper gastrointestinal endoscopy: a European Society of Gastrointestinal Endoscopy (ESGE) Quality Improvement Initiative. Endoscopy 2016;48:843-64. [Crossref] [PubMed]

- Wu L, Zhang J, Zhou W, et al. Randomised controlled trial of WISENSE, a real-time quality improving system for monitoring blind spots during esophagogastroduodenoscopy. Gut 2019;68:2161-9. [Crossref] [PubMed]

- Choi SJ, Khan MA, Choi HS, et al. Development of artificial intelligence system for quality control of photo documentation in esophagogastroduodenoscopy. Surg Endosc 2022;36:57-65. [Crossref] [PubMed]

- Lazăr DC, Avram MF, Faur AC, et al. The Impact of Artificial Intelligence in the Endoscopic Assessment of Premalignant and Malignant Esophageal Lesions: Present and Future. Medicina (Kaunas) 2020;56:364. [Crossref] [PubMed]

- Horie Y, Yoshio T, Aoyama K, et al. Diagnostic outcomes of esophageal cancer by artificial intelligence using convolutional neural networks. Gastrointest Endosc 2019;89:25-32. [Crossref] [PubMed]

- Ishihara R, Takeuchi Y, Chatani R, et al. Prospective evaluation of narrow-band imaging endoscopy for screening of esophageal squamous mucosal high-grade neoplasia in experienced and less experienced endoscopists. Dis Esophagus 2010;23:480-6. [Crossref] [PubMed]

- Cai SL, Li B, Tan WM, et al. Using a deep learning system in endoscopy for screening of early esophageal squamous cell carcinoma (with video). Gastrointest Endosc 2019;90:745-753.e2. [Crossref] [PubMed]

- Fukuda H, Ishihara R, Kato Y, et al. Comparison of performances of artificial intelligence versus expert endoscopists for real-time assisted diagnosis of esophageal squamous cell carcinoma (with video). Gastrointest Endosc 2020;92:848-55. [Crossref] [PubMed]

- Park J, Hwang Y, Kim HG, et al. Reduced detection rate of artificial intelligence in images obtained from untrained endoscope models and improvement using domain adaptation algorithm. Front Med (Lausanne) 2022;9:1036974. [Crossref] [PubMed]

- van Tilburg L, Spaander MCW, Bruno MJ, et al. Increased risk of esophageal squamous cell carcinoma in patients with squamous dysplasia: a nationwide cohort study in the Netherlands. Dis Esophagus 2023;36:doad045. [Crossref] [PubMed]

- Meng QQ, Gao Y, Lin H, et al. Application of an artificial intelligence system for endoscopic diagnosis of superficial esophageal squamous cell carcinoma. World J Gastroenterol 2022;28:5483-93. [Crossref] [PubMed]

- Nakagawa K, Ishihara R, Aoyama K, et al. Classification for invasion depth of esophageal squamous cell carcinoma using a deep neural network compared with experienced endoscopists. Gastrointest Endosc 2019;90:407-14. [Crossref] [PubMed]

- Tokai Y, Yoshio T, Aoyama K, et al. Application of artificial intelligence using convolutional neural networks in determining the invasion depth of esophageal squamous cell carcinoma. Esophagus 2020;17:250-6. [Crossref] [PubMed]

- Shimamoto Y, Ishihara R, Kato Y, et al. Real-time assessment of video images for esophageal squamous cell carcinoma invasion depth using artificial intelligence. J Gastroenterol 2020;55:1037-45. [Crossref] [PubMed]